lbls = np.random.randint(0, 2, size=(10)) # Dataset of size 10 (train=8, valid=2)

is_valid = lambda i: i >= 8

dblock = DataBlock(blocks=[CategoryBlock],

getters=[lambda i: lbls[i]], splitter=FuncSplitter(is_valid))

dset = dblock.datasets(list(range(10)))

item_tfms = [ToTensor()]

wgts = range(8) # len(wgts) == 8

dls = dset.weighted_dataloaders(bs=1, wgts=wgts, after_item=item_tfms)Data Callbacks

Callbacks which work with a learner’s data

CollectDataCallback

def CollectDataCallback(

after_create:NoneType=None, before_fit:NoneType=None, before_epoch:NoneType=None, before_train:NoneType=None,

before_batch:NoneType=None, after_pred:NoneType=None, after_loss:NoneType=None, before_backward:NoneType=None,

after_cancel_backward:NoneType=None, after_backward:NoneType=None, before_step:NoneType=None,

after_cancel_step:NoneType=None, after_step:NoneType=None, after_cancel_batch:NoneType=None,

after_batch:NoneType=None, after_cancel_train:NoneType=None, after_train:NoneType=None,

before_validate:NoneType=None, after_cancel_validate:NoneType=None, after_validate:NoneType=None,

after_cancel_epoch:NoneType=None, after_epoch:NoneType=None, after_cancel_fit:NoneType=None,

after_fit:NoneType=None

):

Collect all batches, along with pred and loss, into self.data. Mainly for testing

WeightedDL

def WeightedDL(

dataset:NoneType=None, # Map- or iterable-style dataset from which to load the data

bs:NoneType=None, # Size of batch

wgts:NoneType=None, shuffle:bool=False, # Whether to shuffle data

num_workers:int=None, # Number of CPU cores to use in parallel (default: All available up to 16)

verbose:bool=False, # Whether to print verbose logs

do_setup:bool=True, # Whether to run `setup()` for batch transform(s)

pin_memory:bool=False, timeout:int=0, batch_size:NoneType=None, drop_last:bool=False, indexed:NoneType=None,

n:NoneType=None, device:NoneType=None, persistent_workers:bool=False, pin_memory_device:str='',

wif:NoneType=None, before_iter:NoneType=None, after_item:NoneType=None, before_batch:NoneType=None,

after_batch:NoneType=None, after_iter:NoneType=None, create_batches:NoneType=None, create_item:NoneType=None,

create_batch:NoneType=None, retain:NoneType=None, get_idxs:NoneType=None, sample:NoneType=None,

shuffle_fn:NoneType=None, do_batch:NoneType=None

):

Weighted dataloader where wgts is used for the training set only

Datasets.weighted_dataloaders

def weighted_dataloaders(

wgts, bs:int=64, shuffle_train:bool=None, # (Deprecated, use `shuffle`) Shuffle training [`DataLoader`](https://docs.fast.ai/data.load.html#dataloader)

shuffle:bool=True, # Shuffle training [`DataLoader`](https://docs.fast.ai/data.load.html#dataloader)

val_shuffle:bool=False, # Shuffle validation [`DataLoader`](https://docs.fast.ai/data.load.html#dataloader)

n:int=None, # Size of [`Datasets`](https://docs.fast.ai/data.core.html#datasets) used to create [`DataLoader`](https://docs.fast.ai/data.load.html#dataloader)

path:str | Path='.', # Path to put in [`DataLoaders`](https://docs.fast.ai/data.core.html#dataloaders)

dl_type:TfmdDL=None, # Type of [`DataLoader`](https://docs.fast.ai/data.load.html#dataloader)

dl_kwargs:list=None, # List of kwargs to pass to individual [`DataLoader`](https://docs.fast.ai/data.load.html#dataloader)s

device:torch.device=None, # Device to put [`DataLoaders`](https://docs.fast.ai/data.core.html#dataloaders)

drop_last:bool=None, # Drop last incomplete batch, defaults to `shuffle`

val_bs:int=None, # Validation batch size, defaults to `bs`

):

Create a weighted dataloader WeightedDL with wgts for the training set

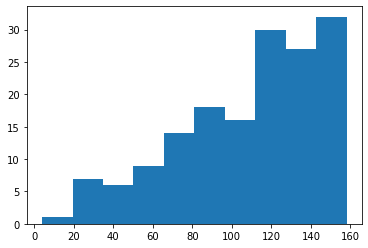

dls.show_batch() # if len(wgts) != 8, this will fail"1n = 160

dsets = Datasets(torch.arange(n).float())

dls = dsets.weighted_dataloaders(wgts=range(n), bs=16)

learn = synth_learner(data=dls, cbs=CollectDataCallback)learn.fit(1)

t = concat(*learn.collect_data.data.itemgot(0,0))

plt.hist(t.numpy());[0, nan, None, '00:00']

DataBlock.weighted_dataloaders

def weighted_dataloaders(

source, wgts, bs:int=64, verbose:bool=False,

shuffle_train:bool=None, # (Deprecated, use `shuffle`) Shuffle training [`DataLoader`](https://docs.fast.ai/data.load.html#dataloader)

shuffle:bool=True, # Shuffle training [`DataLoader`](https://docs.fast.ai/data.load.html#dataloader)

val_shuffle:bool=False, # Shuffle validation [`DataLoader`](https://docs.fast.ai/data.load.html#dataloader)

n:int=None, # Size of [`Datasets`](https://docs.fast.ai/data.core.html#datasets) used to create [`DataLoader`](https://docs.fast.ai/data.load.html#dataloader)

path:str | Path='.', # Path to put in [`DataLoaders`](https://docs.fast.ai/data.core.html#dataloaders)

dl_type:TfmdDL=None, # Type of [`DataLoader`](https://docs.fast.ai/data.load.html#dataloader)

dl_kwargs:list=None, # List of kwargs to pass to individual [`DataLoader`](https://docs.fast.ai/data.load.html#dataloader)s

device:torch.device=None, # Device to put [`DataLoaders`](https://docs.fast.ai/data.core.html#dataloaders)

drop_last:bool=None, # Drop last incomplete batch, defaults to `shuffle`

val_bs:int=None, # Validation batch size, defaults to `bs`

):

Create a weighted dataloader WeightedDL with wgts for the dataset

dls = dblock.weighted_dataloaders(list(range(10)), wgts, bs=1)

dls.show_batch()0PartialDL

def PartialDL(

dataset:NoneType=None, # Map- or iterable-style dataset from which to load the data

bs:NoneType=None, # Size of batch

partial_n:NoneType=None, shuffle:bool=False, # Whether to shuffle data

num_workers:int=None, # Number of CPU cores to use in parallel (default: All available up to 16)

verbose:bool=False, # Whether to print verbose logs

do_setup:bool=True, # Whether to run `setup()` for batch transform(s)

pin_memory:bool=False, timeout:int=0, batch_size:NoneType=None, drop_last:bool=False, indexed:NoneType=None,

n:NoneType=None, device:NoneType=None, persistent_workers:bool=False, pin_memory_device:str='',

wif:NoneType=None, before_iter:NoneType=None, after_item:NoneType=None, before_batch:NoneType=None,

after_batch:NoneType=None, after_iter:NoneType=None, create_batches:NoneType=None, create_item:NoneType=None,

create_batch:NoneType=None, retain:NoneType=None, get_idxs:NoneType=None, sample:NoneType=None,

shuffle_fn:NoneType=None, do_batch:NoneType=None

):

Select randomly partial quantity of data at each epoch

FilteredBase.partial_dataloaders

def partial_dataloaders(

partial_n, bs:int=64, shuffle_train:bool=None, # (Deprecated, use `shuffle`) Shuffle training [`DataLoader`](https://docs.fast.ai/data.load.html#dataloader)

shuffle:bool=True, # Shuffle training [`DataLoader`](https://docs.fast.ai/data.load.html#dataloader)

val_shuffle:bool=False, # Shuffle validation [`DataLoader`](https://docs.fast.ai/data.load.html#dataloader)

n:int=None, # Size of [`Datasets`](https://docs.fast.ai/data.core.html#datasets) used to create [`DataLoader`](https://docs.fast.ai/data.load.html#dataloader)

path:str | Path='.', # Path to put in [`DataLoaders`](https://docs.fast.ai/data.core.html#dataloaders)

dl_type:TfmdDL=None, # Type of [`DataLoader`](https://docs.fast.ai/data.load.html#dataloader)

dl_kwargs:list=None, # List of kwargs to pass to individual [`DataLoader`](https://docs.fast.ai/data.load.html#dataloader)s

device:torch.device=None, # Device to put [`DataLoaders`](https://docs.fast.ai/data.core.html#dataloaders)

drop_last:bool=None, # Drop last incomplete batch, defaults to `shuffle`

val_bs:int=None, # Validation batch size, defaults to `bs`

):

Create a partial dataloader PartialDL for the training set

dls = dsets.partial_dataloaders(partial_n=32, bs=16)assert len(dls[0])==2

for batch in dls[0]:

assert len(batch[0])==16