```python
from fastai.vision.all import *
```

Captum

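Throughout this notebook, we use the Oxford-IIIT Pet images with a cat-vs-dog label (in this dataset, file names of cat breeds start with an uppercase letter):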

```python
path = untar_data(URLs.PETS)/'images'
fnames = get_image_files(path)
def is_cat(x): return x[0].isupper()
dls = ImageDataLoaders.from_name_func(
    path, fnames, valid_pct=0.2, seed=42,
    label_func=is_cat, item_tfms=Resize(128))
```

```python
from random import randint
```

```python
learn = vision_learner(dls, resnet34, metrics=error_rate)
learn.fine_tune(1)
```

Captum Interpretation

The Distill article on attribution baselines gives a good overview of how to choose a baseline image. Below, we try several baselines one by one.
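To see why the baseline matters, here is a minimal, dependency-free sketch of Integrated Gradients, the attribution method the Distill article focuses on. It integrates the model's gradient along the straight path from a baseline to the input, approximated with a midpoint Riemann sum. The toy model `f`, its hand-written gradient `grad_f`, and the helper `integrated_gradients` are our own illustrations, not Captum's API.

```python
def integrated_gradients(grad_fn, x, baseline, n_steps=50):
    """Midpoint Riemann-sum approximation of Integrated Gradients from `baseline` to `x`."""
    diffs = [xi - bi for xi, bi in zip(x, baseline)]
    totals = [0.0] * len(x)
    for k in range(n_steps):
        alpha = (k + 0.5) / n_steps  # midpoint of the k-th step along the path
        point = [bi + alpha * d for bi, d in zip(baseline, diffs)]
        for i, g in enumerate(grad_fn(point)):
            totals[i] += g
    # Scale the averaged gradient by the input-minus-baseline difference
    return [d * t / n_steps for d, t in zip(diffs, totals)]

# Hypothetical toy model f(x) = x0*x1 + 2*x2 and its analytic gradient
def f(x):
    return x[0] * x[1] + 2 * x[2]

def grad_f(x):
    return [x[1], x[0], 2.0]

x = [2.0, 3.0, 1.0]
for name, baseline in [("zeros", [0.0, 0.0, 0.0]), ("ones", [1.0, 1.0, 1.0])]:
    attr = integrated_gradients(grad_f, x, baseline)
    # zeros: approximately [3, 3, 2]; ones: approximately [2, 3, 0]
    print(name, [round(a, 6) for a in attr], "sum:", round(sum(attr), 6),
          "f(x) - f(baseline):", f(x) - f(baseline))
```

In both cases the attributions sum to `f(x) - f(baseline)` (the completeness property), but how that total is split across features changes with the baseline, which is exactly why the choice of baseline image is worth experimenting with.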

CaptumInterpretation

```python
CaptumInterpretation(learn, cmap_name:str='custom blue', colors:NoneType=None, N:int=256,
                     methods:tuple=('original_image', 'heat_map'), signs:tuple=('all', 'positive'),
                     outlier_perc:int=1)
```

Captum Interpretation for Resnet
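The `signs` and `outlier_perc` arguments control how raw attributions are scaled before they are drawn as a heat map. The sketch below assumes they behave like the arguments of the same names in Captum's image-visualization helpers, where (as we understand it) attributions are divided by a cumulative-sum threshold and values beyond it are clipped. The helpers `cumulative_sum_threshold` and `normalize_attr` are hypothetical illustrations, not Captum's code.

```python
def cumulative_sum_threshold(values, percentile):
    """Return the first sorted value at which the running sum reaches `percentile`% of the total."""
    vals = sorted(values)
    if not vals:
        return 0
    target = sum(vals) * percentile / 100
    running = 0
    for v in vals:
        running += v
        if running >= target:
            return v
    return vals[-1]

def normalize_attr(attr, sign="all", outlier_perc=1):
    """Scale attributions into [-1, 1] (or [0, 1] for sign='positive'), clipping the top outliers."""
    if sign == "all":
        # Threshold on magnitudes, keep the signs for the heat map
        threshold = cumulative_sum_threshold([abs(a) for a in attr], 100 - outlier_perc)
    elif sign == "positive":
        # Drop negative evidence entirely
        attr = [a if a > 0 else 0 for a in attr]
        threshold = cumulative_sum_threshold(attr, 100 - outlier_perc)
    else:
        raise ValueError(f"unsupported sign: {sign!r}")
    if threshold == 0:  # all-zero attributions: nothing to scale
        return [0.0] * len(attr)
    return [max(-1.0, min(1.0, a / threshold)) for a in attr]

print(normalize_attr([1, 2, 3, 4], sign="all", outlier_perc=50))
print(normalize_attr([-4, 1, 2, 3], sign="positive", outlier_perc=1))
```

A larger `outlier_perc` lowers the threshold, so more of the strongest attributions saturate at the ends of the colour map and weaker ones become easier to see.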

Interpretation

```python
captum=CaptumInterpretation(learn)
# random.randint includes both endpoints, so subtract 1 to stay inside fnames
idx=randint(0,len(fnames)-1)
```

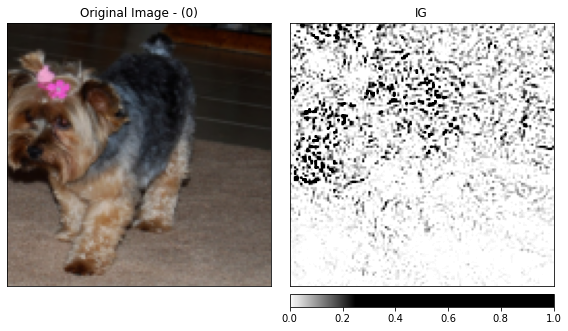

```python
captum.visualize(fnames[idx])
```

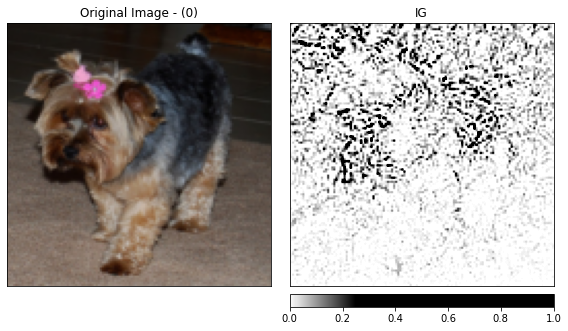

```python
captum.visualize(fnames[idx],baseline_type='uniform')
```

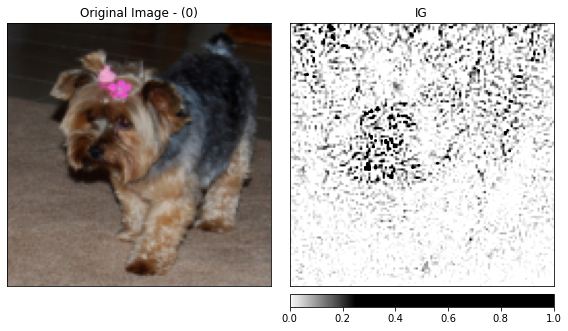

```python
captum.visualize(fnames[idx],baseline_type='gauss')
```

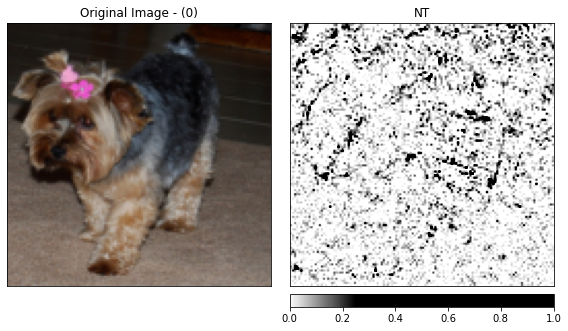

```python
captum.visualize(fnames[idx],metric='NT',baseline_type='uniform')
```
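`metric='NT'` presumably selects Captum's `NoiseTunnel`, which averages attributions over several noisy copies of the input; with the `'smoothgrad'` variant this is SmoothGrad. The sketch below applies SmoothGrad to plain gradients of a toy model. The names `smoothgrad` and `grad_fn`, the noise level, and the sample count are our own assumptions, not the values fastai or Captum use.

```python
import random

def smoothgrad(grad_fn, x, n_samples=50, stdev=0.1, seed=0):
    """Average `grad_fn` over copies of `x` perturbed with Gaussian noise (SmoothGrad)."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    totals = [0.0] * len(x)
    for _ in range(n_samples):
        noisy = [xi + rng.gauss(0.0, stdev) for xi in x]
        for i, g in enumerate(grad_fn(noisy)):
            totals[i] += g
    return [t / n_samples for t in totals]

# For a linear model the gradient is constant, so the noise has no effect
print(smoothgrad(lambda p: [3.0, -2.0], [1.0, 1.0]))
# For f(x) = x0**2 the result is close to the true gradient 2*x0 = 2, up to sampling noise
print(smoothgrad(lambda p: [2 * p[0]], [1.0]))
```

Averaging over noise smooths out the sharp, pixel-level fluctuations that raw gradients tend to show on image models, at the cost of one extra attribution pass per sample.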

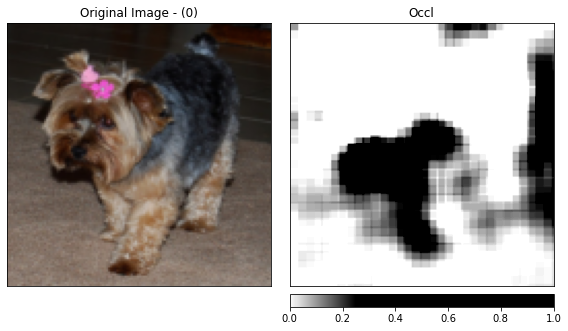

```python
captum.visualize(fnames[idx],metric='Occl',baseline_type='gauss')
```
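`metric='Occl'` presumably refers to occlusion-based attribution (Captum's `Occlusion`): slide a window over the image, replace the covered pixels with a baseline value, and record how much the model's output drops. Here is a minimal sketch on a 2-D list, assuming a pixel covered by several windows receives the average drop over those windows. `occlusion_2d` and the linear toy model are hypothetical illustrations.

```python
def occlusion_2d(model, img, window=(2, 2), stride=(1, 1), baseline=0.0):
    """Attribute model(img) to pixels by occluding windows and averaging the output drop."""
    h, w = len(img), len(img[0])
    wh, ww = window
    sh, sw = stride
    base_out = model(img)
    attr = [[0.0] * w for _ in range(h)]
    counts = [[0] * w for _ in range(h)]
    for top in range(0, h - wh + 1, sh):
        for left in range(0, w - ww + 1, sw):
            occluded = [row[:] for row in img]  # copy so the input is left untouched
            for i in range(top, top + wh):
                for j in range(left, left + ww):
                    occluded[i][j] = baseline
            delta = base_out - model(occluded)
            # Credit the drop to every pixel inside this window
            for i in range(top, top + wh):
                for j in range(left, left + ww):
                    attr[i][j] += delta
                    counts[i][j] += 1
    return [[a / c if c else 0.0 for a, c in zip(ar, cr)] for ar, cr in zip(attr, counts)]

# Hypothetical linear "model": weighted sum of pixels
W = [[1, 2], [3, 4]]
def linear_model(img):
    return sum(W[i][j] * img[i][j] for i in range(2) for j in range(2))

img = [[1, 1], [1, 1]]
print(occlusion_2d(linear_model, img, window=(1, 1)))  # each pixel gets its own weight
print(occlusion_2d(linear_model, img, window=(2, 2)))  # one window: every pixel shares the total
```

Occlusion needs no gradients, only forward passes, so its cost grows with the number of window positions; the window size and stride trade resolution of the heat map against run time.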

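Captum Insights Callback

```python
from captum.insights import Batch

@patch
def _formatted_data_iter(x: CaptumInterpretation, dl, normalize_func):
    # Iterate with a for loop: a bare next() inside a generator turns the
    # end of the DataLoader into a RuntimeError (PEP 479, Python 3.7+)
    for images, labels in dl:
        # Undo the normalisation so Captum Insights receives the original pixel values
        images = normalize_func.decode(images).to(dl.device)
        yield Batch(inputs=images, labels=labels)
```

CaptumInterpretation.insights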

```python
insights(x:CaptumInterpretation, inp_data, debug:bool=True)
```

```python
captum=CaptumInterpretation(learn)
captum.insights(fnames)
```
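The batch-formatting helper that feeds Captum Insights is a small generator that decodes each batch and wraps it in a `Batch` record. The dependency-free sketch below shows that pattern with a stand-in `Batch` namedtuple and a hypothetical scalar `decode` (both our own, not Captum's or fastai's objects). It also shows why the loop form matters: in Python 3.7+, a `StopIteration` raised by `next()` inside a generator body is converted into a `RuntimeError`.

```python
from collections import namedtuple

# Hypothetical stand-in for captum.insights.Batch, keeping only the two fields used here
Batch = namedtuple("Batch", ["inputs", "labels"])

def formatted_iter_for(batches, decode):
    """Decode each (inputs, labels) pair and wrap it; ends cleanly when `batches` runs out."""
    for inputs, labels in batches:
        yield Batch(inputs=decode(inputs), labels=labels)

def formatted_iter_next(batches, decode):
    """Same pattern with while True / next(): exhausting `batches` raises RuntimeError."""
    it = iter(batches)
    while True:
        inputs, labels = next(it)  # StopIteration escaping a generator becomes RuntimeError (PEP 479)
        yield Batch(inputs=decode(inputs), labels=labels)

def decode(xs, mean=0.5, std=0.25):
    """Hypothetical inverse of a scalar normalisation: x * std + mean."""
    return [x * std + mean for x in xs]

batches = [([0.0, 2.0], ["cat", "dog"]), ([-2.0], ["dog"])]
print(list(formatted_iter_for(batches, decode)))
try:
    list(formatted_iter_next(batches, decode))
except RuntimeError as err:
    print("RuntimeError:", err)
```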